Surfing the Singularity: Staying Relevant in a Time of Rapid Change

If you've been tracking the technology industry, and the software space in particular, for any amount of time, you've witnessed the accelerating rate of technical change. It was always there, but now it's become impossible to miss.

The rate of technological change has seemed exponential for a while now, but recent advancements in AI have pushed this curve to new heights.

An Accenture report released for Davos 2024 suggests that C-level leaders see the rate of technological change as the number one most impactful force on their business - more than financial or geopolitical matters - largely as a result of advances in various forms of AI tooling. [1] Of those surveyed, 88% see the rate of change increasing even further, and half say their organizations are not ready, even though 70% see it as a revenue opportunity.

Dinosaur Developers?

Today, staying alive in business, especially the business of software engineering, means surfing increasingly turbulent and potentially disruptive waters. Consider the recently leaked remarks of Amazon Web Services CEO Matt Garman, in which he suggested that a mere two years from now most AWS programmers wouldn't be coding. [2] In its Q2 investor call, Amazon cited 4,500 person-years of savings through the use of AI assistants on mostly mundane programming tasks like porting and hardening code with patterns of best practices. [3]

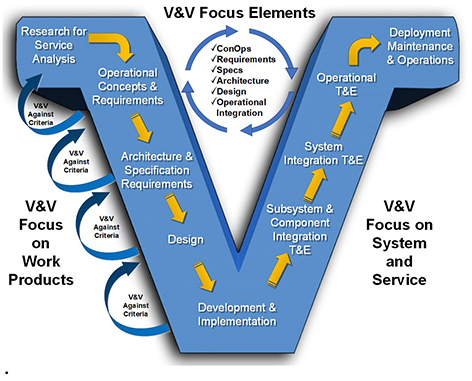

The International Monetary Fund suggests AI will impact about 60% of jobs in advanced economies and may increase wealth inequality, with the impact skewed toward higher-income countries. [4] These remarks from influential leaders in the industry suggest that the impact of AI will be felt most acutely among software practitioners. Those of us who use integrated development environments (IDEs) to write code (and documents, like this one) with AI assist are already familiar with the benefits. For those unwilling to adapt, to retool and upskill, the future might be bleak. Growing might mean zooming out from code to a more soup-to-nuts view of the software engineering process, especially specification and validation - the need to clearly state requirements and validate results without an immediate need to focus on implementation details. Notice that in the below diagram, taken from the Federal Aviation Administration, which is increasingly interested in software engineering and model validation, traditional coding sits only at the bottom of the process rendered as a "V". [5]

So how to stay relevant in a rapidly changing world, to stay one step ahead of AI and the algorithmic job reaper? A recent LinkedIn survey of technologists suggests the number one thing a person can do is to learn new technologies. [6]

A recent Gartner report [7] on the 30 most impactful technologies lists quantum computing as a weighty, albeit distant, critical enabler. Why? For starters, the existence of Shor's quantum integer-factoring algorithm means it's a matter of when, not if, quantum computers will be used to crack existing military-grade encryption. In the hands of an adversary, especially if the capability is kept secret, as the breaking of the Enigma cipher was in WWII, the results could be catastrophic, and this is a good part of what is fueling the current government interest in quantum computing.
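For readers curious why factoring reduces to something a quantum computer is good at, here is a minimal sketch of the classical skeleton of Shor's approach. The period-finding step is done by brute force here, which is exactly the part a quantum computer would accelerate. The function names `find_period` and `factor_from_period` are my own, and the toy only makes sense for small numbers such as 15.

```python
from math import gcd

def find_period(a, n):
    """Smallest r > 0 with a**r % n == 1, found by brute force.
    Assumes gcd(a, n) == 1. This is the step Shor's algorithm
    hands to the quantum computer."""
    r, value = 1, a % n
    while value != 1:
        value = (value * a) % n
        r += 1
    return r

def factor_from_period(a, n):
    """Try to split n using the period of a modulo n.
    Returns a pair of factors, or None if this choice of a fails."""
    g = gcd(a, n)
    if g != 1:
        # Lucky guess: a already shares a factor with n
        return tuple(sorted((g, n // g)))
    r = find_period(a, n)
    if r % 2 == 1:
        return None  # odd period, pick another a
    half = pow(a, r // 2, n)
    if half == n - 1:
        return None  # trivial square root of 1, pick another a
    p = gcd(half - 1, n)
    return tuple(sorted((p, n // p)))

print(factor_from_period(7, 15))  # (3, 5)
```

The brute-force loop takes time that grows exponentially with the number of digits in `n`, which is why today's encryption is safe from classical machines and why a fast quantum period finder matters.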

Off to Quantum Summer School

So for me, it was back to school. Summer school. First I hit the stacks and brushed my very stale self up on the fundamentals of the necessary calculus and linear algebra, and on quantum mechanics to at least an undergraduate level of understanding. I read several texts on the subject of quantum computing, including the K&R of quantum, "Mike & Ike" (Nielsen and Chuang), consumed mass quantities of videos from companies like IBM and Xanadu, and kicked the tires on their programming tool kits.

Next I traveled a short distance from my home office to the Griffiss Institute in Rome NY for their now-annual "Quantum 4 International" conference. This consisted of an impressive array of researchers and government administrators presenting their latest findings and laboratory results, often in a sort of national inventory of funded priority projects. The US Air Force, which maintains a research presence in Rome NY, is particularly interested in quantum computing and networking, for example, scaling up to a larger quantum computer by networking (entangling) a set of smaller ones. The Army and Navy are more focused on non-computing aspects of quantum technology - sensing, magnetics, material defect identification, and radio reception. The Canadian delegation was focused on many of the same research topics, as well as a national emphasis on quantum technology education. To be impactful in quantum computing, one must be able to meld a variety of maths, physics, and programming skills with an unusual level of creativity to design novel and efficient algorithms which take advantage of the power of the qubit. As a former college adjunct, I can say this is no small educational challenge. Finally, researchers from the EU demonstrated new upper bounds on entanglement at a distance for wider area networking, and the use of novel estimation techniques to scale up quantum simulators in this "NISQ" era where real quantum computers are still small, noisy, fragile, and scarce. What was noticeably lacking was the demonstration of any current industrial utility for quantum computing applications, and the head of DARPA saw none emerging until we collectively move beyond the NISQ era.

Pack a Remote Lunch

While some industrial domains like chemistry will likely gain utility first, the head of DARPA suggests that utility in my own current application area - computational fluid dynamics (CFD) - will not emerge until we move into the "cryptographically relevant" era. It was with this in mind that I remotely attended a week-long, NATO-funded course at the von Karman Institute for Fluid Dynamics in Belgium called "Introduction to Quantum Computing in Fluid Dynamics". Entirely civilian in nature, the training was aimed at CFD researchers who might take advantage of one of the quantum facilities being installed at national laboratories in the US and EU, often collocated with their existing high performance computing (HPC) clusters. Not being a physicist, I spent much of the class building general domain literacy, and the "tl;dr" is the re-emergence of particle-based methods like Lattice Boltzmann as a focus of research, over the finite volume methods for solving the Navier-Stokes equations that currently dominate HPC-based CFD.

With mind fully blown by the von Karman experience, I next took two weeks and attended the IBM Global Quantum Summer School, 2024 edition, consisting of 10 lectures on a variety of topics and 4 labs. The videos are now posted on YouTube [8] and while I personally enjoyed the lecture on Hamiltonian simulation, there was a distinct and unfortunately NISQ-era necessity to focus on error correction and compensating for noise, and on the inner workings of the IBM Qiskit transpiler. In the latter case, because of the diverse nature of the emerging quantum hardware, because inter-qubit connectivity is often not N-way, and because at this stage things often break, it becomes common to mess with the compiler, and to adopt a toolchain with an eye to portability. Qiskit, a library and tool set for Python, is one of a couple of frameworks (another being PennyLane) which currently meet this need, and the labs went to great lengths to expose the student to the various topological mapping, translation, and optimization stages which are present in the quantum programming toolchain. And we got to play with a Hamiltonian simulation of up to 50 qubits on real hardware, as most classical machines would have a hard time managing the simultaneous behavior of 50 spins.
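To give a feel for why limited qubit connectivity forces a mapping stage, here is a toy sketch in plain Python. It is not Qiskit code and not the routing algorithm Qiskit uses; the function name `route_on_line` and the assumption that the hardware is a simple chain of qubits (0-1-2-...) are mine, chosen only for illustration. Whenever a two-qubit gate targets qubits that are not neighbours, the toy inserts SWAPs until they are.

```python
def route_on_line(gates, n_qubits):
    """Toy router for a linear chain of physical qubits 0-1-...-(n-1).
    `gates` is a list of (control, target) logical qubit pairs.
    Returns the routed operations and the final logical-to-physical layout."""
    # layout[logical] = physical position; start with the trivial layout
    layout = list(range(n_qubits))
    routed = []
    for a, b in gates:
        pa, pb = layout[a], layout[b]
        # Walk logical qubit a toward b, one SWAP at a time
        while abs(pa - pb) > 1:
            neighbour = pa + (1 if pb > pa else -1)
            routed.append(("swap", pa, neighbour))
            # The logical qubit that sat on `neighbour` moves to `pa`
            other = layout.index(neighbour)
            layout[other], layout[a] = pa, neighbour
            pa = neighbour
        routed.append(("cx", pa, pb))
    return routed, layout

routed, layout = route_on_line([(0, 3)], 4)
print(routed)  # [('swap', 0, 1), ('swap', 1, 2), ('cx', 2, 3)]
```

A single logical gate became three physical operations, and every extra SWAP adds noise on NISQ hardware, which is why so much care goes into choosing the layout and optimizing the result.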

Next Up: AI Assistants & Hybrid Quantum Computing

During the Qiskit labs, naturally I was using LLM assist in my IDE, at minimum for tedious or repetitive tasks. But it was remarkable how often the AI assistant was helpful, even for a seemingly niche programming task such as using a quantum computing framework. I intend to delve into this topic more in a future blog and share my experiences with the various emerging AI tools for code and document assist, as well as in the broader end-to-end software engineering context.

In addition, I intend to share future blog installments as my quantum education in search of industrial utility continues through the fall conference season. As a software engineer, I'll be particularly on the lookout for frameworks, including those which leverage AI, which allow the programmer to rise above the level of 1950s-like qubits and gates to higher and portable constructs. I'll also be sharing learnings on the rise of classical-quantum hybrids, especially in HPC contexts, since today's quantum approaches, such as variational algorithms that iteratively converge on a solution, require a classical computer in the loop. Here is another place where toolchains will play a major role, and where heterogeneous workflows which utilize AI tools will likely be impactful.
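The shape of such a hybrid loop can be sketched in a few lines of plain Python. This is a toy under stated assumptions, not code from any framework: the "quantum" step is replaced by the known closed-form result for a single qubit (the expectation of Z after an RY rotation by theta on |0> is cos(theta)), and the names `expectation_z`, `parameter_shift_gradient`, and `minimise` are my own. On real hardware the expectation would be estimated from repeated measurements, while the optimizer would still run classically.

```python
import math

def expectation_z(theta):
    """Stand-in for the quantum step: <Z> after RY(theta) on |0> is cos(theta)."""
    return math.cos(theta)

def parameter_shift_gradient(theta):
    """Gradient from two extra circuit evaluations, shifted by +/- pi/2."""
    shift = math.pi / 2
    return 0.5 * (expectation_z(theta + shift) - expectation_z(theta - shift))

def minimise(theta=0.4, learning_rate=0.4, steps=100):
    """Classical outer loop: plain gradient descent on the circuit parameter."""
    for _ in range(steps):
        theta -= learning_rate * parameter_shift_gradient(theta)
    return theta, expectation_z(theta)

theta, energy = minimise()
print(f"theta = {theta:.4f}, energy = {energy:.4f}")
```

The loop drives theta toward pi, where the expectation value reaches its minimum of -1. Each iteration costs two circuit evaluations plus a classical update, which hints at why tight coupling between the quantum device and the HPC side matters.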

Until next time, enjoy these last few weeks of summer.

- andy (linkedin: andygallojr)

References:

0. Photo by Ben Wicks on Unsplash

1. https://www.accenture.com/us-en/about/company/pulse-of-change

2. https://www.businessinsider.com/aws-ceo-developers-stop-coding-ai-takes-over-2024-8

4. https://nypost.com/2024/01/15/business/ai-will-affect-60-of-us-jobs-imf-warns/

5. https://www.faa.gov/sites/faa.gov/files/2024-07/d_VVFlow_2024Mar21.jpg

7. https://www.gartner.com/en/articles/30-emerging-technologies-that-will-guide-your-business-decisions

8. https://www.youtube.com/playlist?list=PLOFEBzvs-Vvr-GzDWlZpAcDpki5jUqYJu
